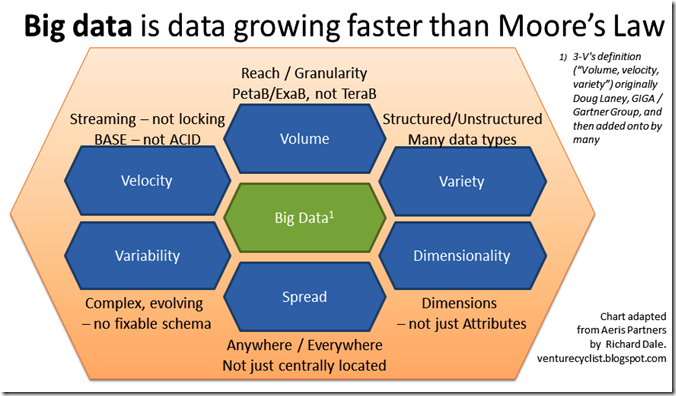

Doug Laney is the original creator of the 3V’s definition of Big Data, which refers to the volume, velocity, or variety of data that is hard to handle with traditional data management tools and techniques. In August last year I proposed a better definition: Big Data is data growing faster than Moore’s Law. Many others have talked about extending the 3V’s definition of big data, and one of the proposed additions is a fourth V: “Value”. In my personal view this is somewhere between irrelevant and dangerous. Any data may or may not have value, and that value is highly context-sensitive. If you want to know tomorrow’s weather, then knowing the stock market closing price from 1897 is of no value. The beauty of big data is that while most of it may be irrelevant, the patterns that emerge from it can be of real interest and value. Furthermore, the value of big data may not become clear until long after it is created: only once we had collected countless tweets from the early years of Twitter did someone realize that information relevant to stock prices might be buried in that stream of “valueless” data.

D. Robinson posted a great article in December called Big Data- The 4 V's - The Simple Truth; Part 4 - Making Data Meaningful. It discusses the need for Veracity (whether the data reliably records what is going on) and the problems of Variability (where a system may record different values for the same physical activity on different occasions). However, even these are not defining characteristics of big data; rather, they are interesting attributes of any data collection.

Instead, let me offer some other extensions to the 3V’s definition. You don’t need all of these to have big data, but the more of them you have, the more likely it is that you are dealing with big data.